Apple Visual Intelligence in iOS 26 was expected to make a significant leap at WWDC 2025. When Craig Federighi, Apple’s senior VP of software engineering, introduced it, many hoped it would evolve from a passive camera tool into something more dynamic and interactive. While new features were revealed, such as integration into the screenshot interface, the update feels more like an incremental step than a revolutionary advance in artificial intelligence.

To be fair, the new Apple Visual Intelligence options are useful. You no longer have to switch apps or upload photos manually to analyze visuals. Now, when you take a screenshot, options like “Ask” and “Search” appear right on the screen. The “Ask” button routes the image through ChatGPT, while “Search” performs an on-device scan. It’s seamless, it’s secure, and it saves time. But it’s not groundbreaking.

This feature functions well across default iOS apps. You can draw a circle around a shirt in a photo, and Apple Visual Intelligence will recognize it, then direct you to its product page or a related search result. Apple is also releasing an API for developers to make third-party apps compatible, which could expand its practical applications. That’s a welcome improvement for users who prefer everything to stay within the Apple ecosystem.

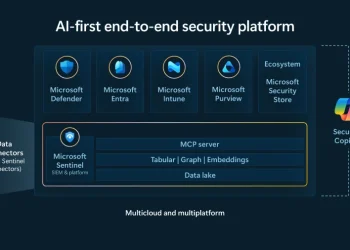

Still, something is missing. Compared with Google’s Gemini Live or Microsoft’s Copilot Vision, Apple’s tool feels passive. It can’t engage in real-time voice conversations about what the camera sees. Gemini Live lets you point your camera at a tree, identify it, and continue asking questions in a natural flow. That conversational capability makes the feature feel genuinely smart and futuristic, something that Apple Visual Intelligence hasn’t achieved yet.

Apple’s restraint is likely due to its commitment to on-device AI and user privacy. Most of Gemini’s power comes from cloud-based processing, while Apple prefers to limit what data leaves the device. The trade-off is noticeable. Despite advances in Private Cloud Compute, Apple’s privacy-focused strategy restricts the scope of what Apple Visual Intelligence can offer today.

The foundation is promising. Visual analysis is fast, tightly integrated into the OS, and respects privacy boundaries. These are significant advantages in a world where trust in AI is fragile. Yet the absence of natural interaction and voice feedback makes the experience feel dated when compared with competitors that are not shackled by the same privacy framework.

In its current form, Apple Visual Intelligence is a reliable, secure, and useful assistant. But it still lacks the “wow” factor, those moments when technology feels magical. With competitors innovating at a rapid pace, Apple will need to find a way to push further, ideally without compromising its principles. Until then, Visual Intelligence will remain a helpful but limited tool in iOS 26.